What to Do When Google Decides Your SaaS Is Dangerous

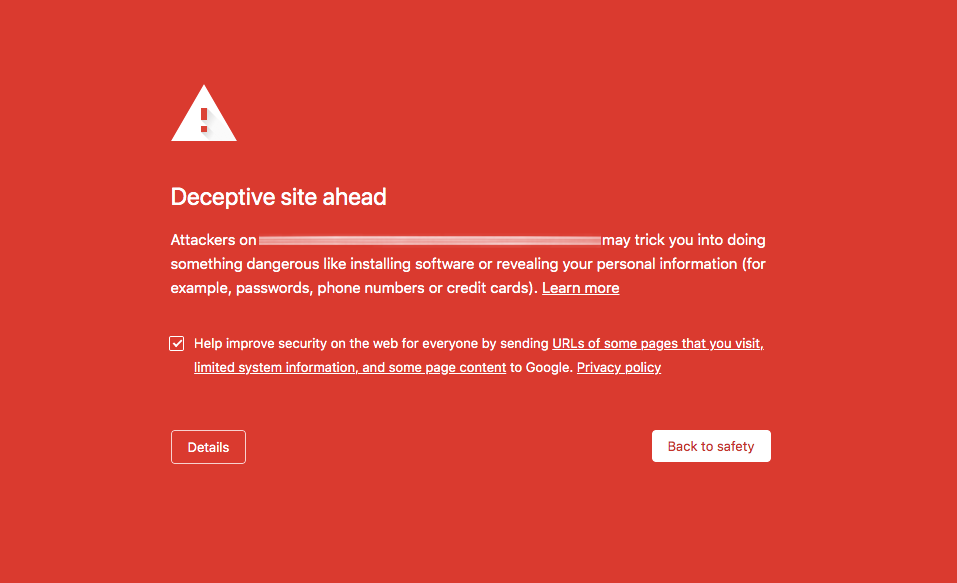

One day, out of the blue, we started getting customer complaints about a red screen that showed up whenever they tried to download an attachment.

But our own monitoring systems were showing that everything was healthy. Not a good sign.

So I logged in and tried to download an attachment. It was a screenshot I had already viewed once earlier in the day.

But this time, instead of the picture, I got the red screen of death.

Not a good sign at all.

So I ran a few tests:

- Was it only for image attachments? Nope, it was all attachments.

- Was it the folder that the attachment was in? Nope, it was all folders.

- Was it just Chrome? Nope, Firefox seemed to block it too!

The impact was significant: Google had blocked the entire S3 bucket and Firefox also seemed to respect that block. If any customer tried to download an attachment they received in Enchant, they would see a big red scary screen.

How these blocks happen

These red screens happen because the URL of your site got into the Google Safe Browsing database.

One way this can happen is if your website was hacked and is actually serving malicious content. This is common with outdated blog software installs.

Another way this can happen is if you had some malicious content uploaded to your services. This is one of the risks of hosting user generated content.

In our case, it was through malicious content that arrived as part of an incoming email.

We're in the business of providing shared inbox software to other companies. So we receive lots of email on their behalf, which includes spam and malicious content.

But this isn't something new to us – the business of email includes dealing with all sorts of bad actors. We already do a number of things to protect our customers:

- We strip out JavaScript and executable CSS content from incoming emails.

- We proxy any images to prevent leaking browser or IP details of our customers.

- We scan emails to identify spammy or dangerous content.

In this case, none of that helped. Something slipped through and Google found it.

Digging deeper

But a bad attachment getting into our S3 bucket shouldn't be dangerous.

It will just sit there and eventually get deleted when the customer marks the email as spam or trash.

So what happened this time?

I logged into Google Search Console (aka Google Webmaster Tools), the tool provided by Google that gives you information that they have about your website.

I verified access to the S3 bucket (by manually uploading a file they provide) and went to the Security issues tab.

Aha!

On the Security issues tab, they gave me a path to a specific malicious file.

No problem, we removed the file from the bucket and submitted the website to Google for review so they could reassess it.

But HOW did they know the file was malicious?

This S3 bucket is private by default. The only way you can download from this bucket is with a signed URL (these are issued by our app to its users, and are only valid for a few minutes).

Google's own crawlers would just get a permission error.

So I was a little puzzled about how they identified the file as malicious in the first place.

My best guess is that the customer actually downloaded that file. Their browser (Chrome, I assume) identified it was malicious and reported it to Google. Which has all sorts of privacy implications in itself.

But more importantly, this means that when they try to assess if we removed the file, they won't really be able to anyway. They'll just get a permission error.

Either way, I wasn't going to wait on Google.

Google is kind of famous for having poor/non-existent customer support, so I had no hope of getting a response in any reasonable time from them.

Our customers were getting bothered by a big red screen when they tried to download attachments and it was on us to fix it.

Google had blocked the entire S3 bucket subdomain. So we would have to serve attachments some other way.

And what guarantee do we have that they wouldn't just do the same to the next subdomain?

None, really. :(

Google has some automated process that is blocking an entire subdomain and we have no way to get expedited human action from Google about it. So we needed to figure out the best course of action to protect our customers and our business.

We decided to put proxies in front of our S3 bucket.

To be clear: not one proxy, but many proxies.

The idea was simple. We would spread our attachment traffic across a bunch of proxies, all ultimately routing to the same S3 bucket. If any one of them got into the Google Safe Browsing database, it would only affect a small percentage of attachments. And if that happened, we could fix things by dropping the flagged proxy from the rotation.
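To make the rotation concrete, here is a minimal sketch of how an app might pick a proxy for each attachment link. It is not our production code: the proxy hostnames, the `attachment_url` helper, and the hash-based selection are all assumptions made for illustration.

```python
import hashlib
from typing import Iterable, Sequence

# Hypothetical proxy hostnames; each one forwards to the same S3 bucket.
PROXIES = [
    "proxy-a.example.com",
    "proxy-b.example.com",
    "proxy-c.example.com",
    "proxy-d.example.com",
]


def pick_proxy(attachment_id: str, proxies: Sequence[str]) -> str:
    """Map an attachment to one proxy, spreading attachments evenly."""
    if not proxies:
        raise ValueError("no healthy proxies left in the rotation")
    digest = hashlib.sha256(attachment_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(proxies)
    return proxies[index]


def attachment_url(
    attachment_id: str,
    path: str,
    proxies: Sequence[str] = PROXIES,
    flagged: Iterable[str] = (),
) -> str:
    """Build a download URL, skipping any proxy that has been flagged."""
    blocked = set(flagged)
    healthy = [p for p in proxies if p not in blocked]
    host = pick_proxy(attachment_id, healthy)
    return f"https://{host}/{path.lstrip('/')}"
```

Dropping a flagged proxy is then just a matter of adding its hostname to `flagged`. Removing a host reshuffles which proxy serves which attachment, but that should be harmless if download links are generated fresh on each request.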

Given the simplicity of this concept, we were up and running with a minimal proxy within an hour or so and the big red screen was gone.

For what it's worth, Google did respond a couple of days later and removed our S3 bucket from the Google Safe Browsing database.

Think carefully about the domain from which you serve user generated content

Our initial thought was to set up proxies with a wildcard certificate, giving us unlimited subdomains to work with.

But Google has in the past blocked all subdomains on a domain, so we weren't so confident about taking that approach.

In our case, we decided to build our proxies using subdomains on domains that are too big to be blocked. There are actually lots of ways to accomplish this – Heroku, AWS API Gateway or the many alternatives to these.

Our final solution uses multiple API Gateway endpoints.

Dropbox has an interesting subdomain setup

Dropbox has a ton of user generated content.

If you're browsing their website, your address bar always says dropbox.com, but if you preview a file, the file is actually being served from dropboxusercontent.com.

Not only that, but the subdomain is specific to the user account that uploaded the file. For example, previews come from uc123.previews.dropboxusercontent.com and downloads come from uc123.dl.dropboxusercontent.com (where uc123 is an account-specific identifier).

If Google blocks a specific subdomain, it only affects a specific user. If Google blocks the entire domain, it impacts dropboxusercontent.com and doesn't bring down dropbox.com.
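A similar per-account scheme could be sketched as follows. This is only an illustration of the idea, not Dropbox's implementation: the `usercontent.example` domain, the `uc` prefix handling, and the `user_content_host` helper are assumptions.

```python
import re

# Hypothetical domain reserved for user generated content,
# kept separate from the main application domain.
USER_CONTENT_DOMAIN = "usercontent.example"

# A DNS label: lowercase letters, digits and hyphens, at most 63
# characters, and no hyphen at either end.
_LABEL = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$")


def user_content_host(account_id: int, purpose: str) -> str:
    """Return the hostname that serves one account's files.

    `purpose` separates kinds of traffic, e.g. "previews" or "dl".
    """
    if account_id < 0:
        raise ValueError("account_id must be non-negative")
    account_label = f"uc{account_id}"
    for label in (account_label, purpose):
        if not _LABEL.match(label):
            raise ValueError(f"invalid DNS label: {label!r}")
    return f"{account_label}.{purpose}.{USER_CONTENT_DOMAIN}"
```

With this layout, a block on one hostname only touches one account, and a block on the whole user content domain leaves the main application domain alone.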

We needed automated monitoring going forward

We should know our systems are misbehaving well before a customer notifies us. In this case, we didn't... and that was another thing we needed to fix.

Thankfully, Google does provide access to the Safe Browsing data via two APIs: Google Safe Browsing API and the Google Web Risk API.

So this was an easy fix: we set up a process that hits the API every few minutes and raises an alert if any of our domains or proxies shows up on the list.

Takeaways
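For reference, a check like this can be sketched against the Safe Browsing Lookup API (v4), which accepts a list of URLs in a POST to `threatMatches:find` and returns any matches. This is a minimal sketch rather than our actual monitor: the client ID, the chosen threat types, and the function names are assumptions, and you would need your own API key.

```python
import json
import urllib.request

LOOKUP_ENDPOINT = "https://safebrowsing.googleapis.com/v4/threatMatches:find"


def build_lookup_request(urls, client_id="example-monitor", client_version="0.1"):
    """Build the JSON body for a Lookup API request (client values are placeholders)."""
    return {
        "client": {"clientId": client_id, "clientVersion": client_version},
        "threatInfo": {
            "threatTypes": ["MALWARE", "SOCIAL_ENGINEERING", "UNWANTED_SOFTWARE"],
            "platformTypes": ["ANY_PLATFORM"],
            "threatEntryTypes": ["URL"],
            "threatEntries": [{"url": url} for url in urls],
        },
    }


def flagged_urls(response_body):
    """Extract the flagged URLs; an empty response object means no matches."""
    matches = response_body.get("matches", [])
    return sorted({match["threat"]["url"] for match in matches})


def check_urls(urls, api_key, timeout=10):
    """Query the API and return whichever of `urls` are currently listed."""
    payload = json.dumps(build_lookup_request(urls)).encode("utf-8")
    request = urllib.request.Request(
        f"{LOOKUP_ENDPOINT}?key={api_key}",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return flagged_urls(json.load(response))
```

A scheduled job could call `check_urls` with every domain and proxy URL in the rotation and page someone whenever the returned list is not empty.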

- You need to take care how you serve user generated content, or it will become a liability.

- Host your user generated content on a separate domain, if possible.

- Segregate your customers on separate subdomains, if possible.

- If your customers don't directly control the content that is being hosted in their account, consider putting proxies in front of the user generated content domains to minimize the impact of any automated block by Google Safe Browsing.

- If you do find your domains in the Google Safe Browsing database, use Google Search Console to identify the bad URLs and to submit the site back to Google for review.